Abstract:

AI workloads such as LLM serving are inherently dynamic. Elastic systems, which scale computing resources up and down to match the current workload, can use resources more efficiently. However, scaling comes with performance overhead: for example, incoming serving requests must wait for new resources to be provisioned before they can be processed. Traditional fast-scaling methods face two fundamental challenges with AI workloads. First, they support only CPUs. Second, their on-demand loading optimizations do not work for model loading, because most models require all parameters to be loaded before an instance can start serving. This talk will focus on our recent work on accelerating resource provisioning for AI workloads. Our first system, PhoenixOS, can bootstrap a Llama-2 13B inference instance on one GPU from scratch in 300 ms, which is 10–96× faster than state-of-the-art counterparts such as cuda-checkpoint. Our second system, BlitzScale, further improves serving performance through co-designed model loading across the model, system, and network. It does not require loading a full copy of the parameters, and it does not modify the model inference process (e.g., no early exit).

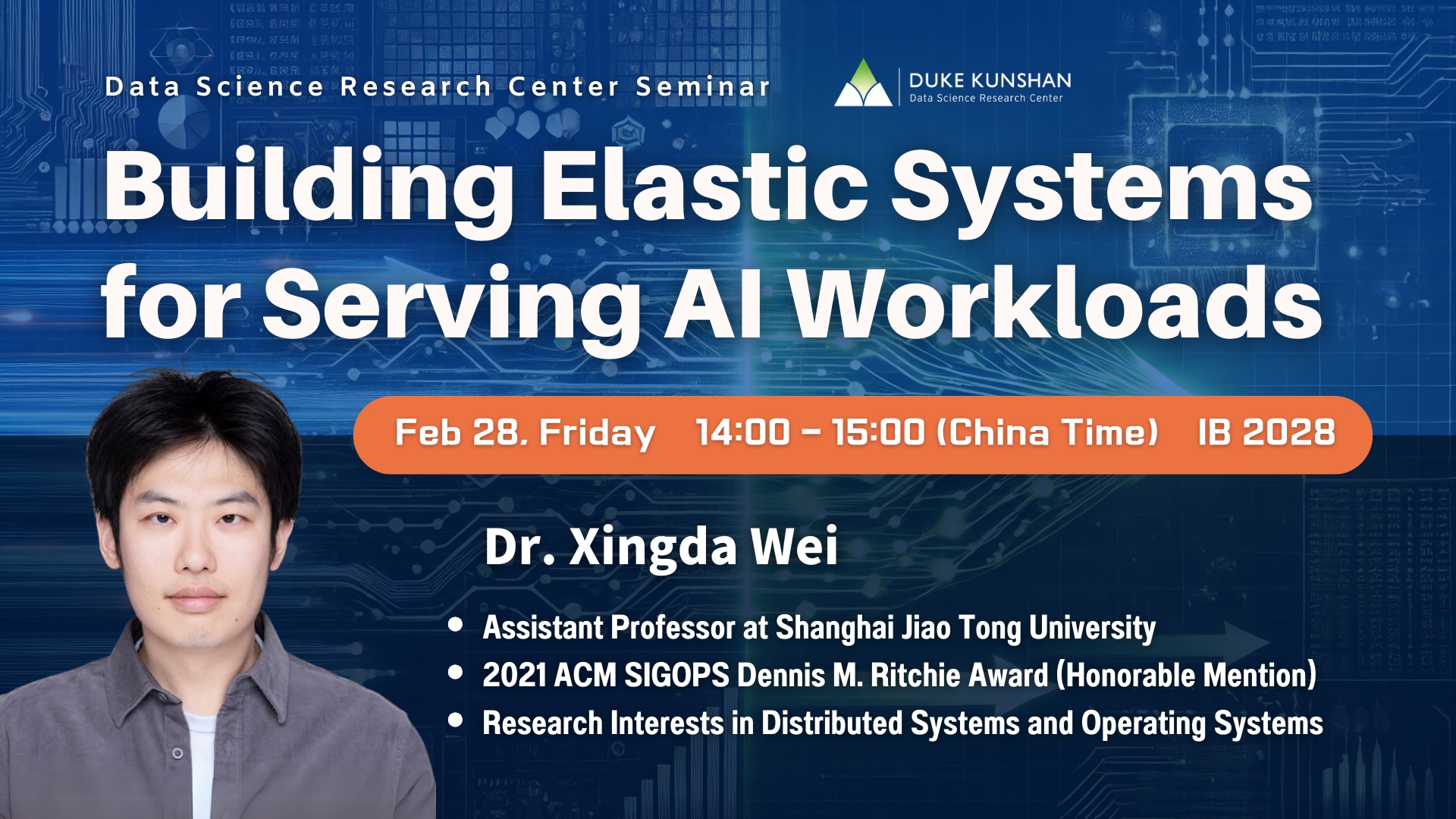

Biography:

Xingda Wei is a tenure-track Assistant Professor at Shanghai Jiao Tong University. His main research interests are distributed systems and operating systems, with a recent focus on improving the performance, reliability, and resource efficiency of system support for AI. He has published papers at conferences including OSDI, SOSP, EuroSys, and NSDI. His awards include the EuroSys 2024 Best Paper Award, the 2022 Huawei OlympusMons Award, and the 2021 ACM SIGOPS Dennis M. Ritchie Award. His doctoral dissertation received a 2021 ACM China Distinguished Doctoral Dissertation Award nomination and the ACM ChinaSys Outstanding Doctoral Dissertation Award. He serves on the program committees of several leading systems conferences, including OSDI, ASPLOS, and NSDI, and as program committee chair of ACM ChinaSys.