Our research in audio, speech and language processing covers speaker recognition, speaker diarization, speech synthesis, voice conversion, speech separation, keyword spotting, speech recognition, speech enhancement, language identification, paralinguistic speech attribute recognition, and related tasks. More than 100 papers have been published in top conferences and journals in this field. Collaborations with multiple industry leaders and local companies are ongoing, covering both joint research and technology transfer.

Multimodal behavior signal analysis and interpretation has been conducted toward AI-assisted diagnosis of and intervention for Autism Spectrum Disorder (ASD). An AI studio has been developed for the early screening of ASD. The studio’s four walls are programmable projection screens that can recreate a variety of settings, such as a forest, with sound delivered through multichannel audio equipment. The therapist can use the studio to interact with the child, for example by asking him or her to point at a certain object projected onto the wall and observing the reaction. At the same time, cameras capture the movements of the child and the therapist, including gestures, gaze and other actions. The studio is equipped with more than 10 technologies that have been granted patents or have patent applications pending. These include gaze detection, human pose estimation, face detection, face recognition, emotion recognition, speech recognition, speaker diarization, paralinguistic attribute detection and text understanding.

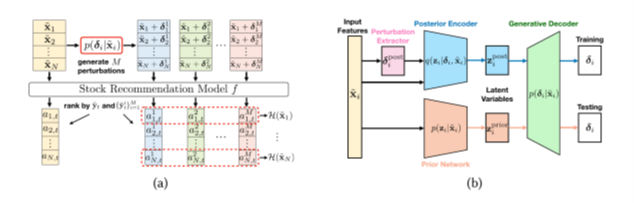

Machine learning (ML) and deep neural networks (DNNs) have achieved great success in many applications. However, recent research shows that DNNs are vulnerable to small perturbations of the input data and may generalize poorly to new samples, which makes them less trustworthy in real-world scenarios. In this research, we aim to establish a systematic framework for building robust and trustworthy ML models with strong generalization to future or unseen data. We pursue both theoretical and practical explorations from the perspectives of adversarial training and data augmentation. So far, our research has been published in premier ML journals and conferences, including MLJ, TOIS, ICML, WWW, ICCV, NeurIPS, and AAAI.

Fig. Robust adversarial training (b) can help recommend ‘trustworthy’ stocks or rank different stocks according to their risks (a). More details can be found in our work published in TOIS 24.

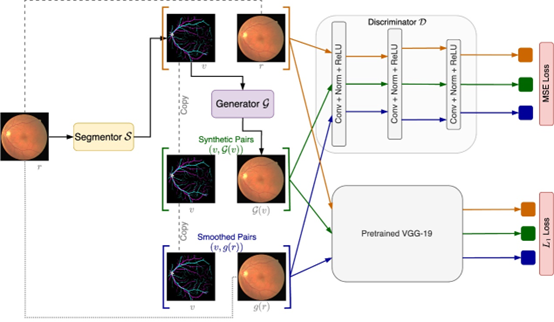
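To make the idea of adversarial training concrete, the sketch below uses the Fast Gradient Sign Method (FGSM), a standard attack from the literature, as the inner step of training a plain logistic-regression classifier. It is a minimal illustration under simplifying assumptions (a linear model, a single FGSM step as the inner maximization, full-batch gradient descent) and is not the method from our publications; the function names and the toy data are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fgsm(X, y, w, b, eps):
    """FGSM: move each input by eps (L-infinity budget) in the sign direction
    of the gradient of the logistic loss with respect to the input."""
    p = sigmoid(X @ w + b)              # predicted P(y=1), shape (n,)
    grad_x = (p - y)[:, None] * w       # d(loss)/dx for logistic loss, shape (n, d)
    return X + eps * np.sign(grad_x)


def adversarial_train(X, y, eps=0.2, lr=0.5, epochs=200):
    """Logistic regression trained on FGSM-perturbed inputs (min-max training)."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        X_adv = fgsm(X, y, w, b, eps)   # inner step: approximate worst-case perturbation
        p = sigmoid(X_adv @ w + b)      # outer step: fit the model to the perturbed data
        w -= lr * X_adv.T @ (p - y) / n
        b -= lr * np.mean(p - y)
    return w, b


def accuracy(X, y, w, b):
    return float(np.mean((sigmoid(X @ w + b) > 0.5) == y))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical toy data: two Gaussian clusters in 2-D.
    X = np.vstack([rng.normal(-1.0, 0.5, (50, 2)), rng.normal(1.0, 0.5, (50, 2))])
    y = np.r_[np.zeros(50), np.ones(50)]
    w, b = adversarial_train(X, y)
    print("clean accuracy:", accuracy(X, y, w, b))
    print("accuracy under FGSM:", accuracy(fgsm(X, y, w, b, 0.2), y, w, b))
```

Training on perturbed inputs pushes the decision boundary away from the data, so accuracy under the same FGSM budget stays high; deep models replace the closed-form input gradient with automatic differentiation.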

In recent years, advancements in retinal image analysis, driven by machine learning and deep learning techniques, have enhanced disease detection and diagnosis through automated feature extraction. However, challenges persist, including limited dataset diversity due to privacy concerns and imbalanced sample pairs, hindering effective model training. To address these issues, we introduce the Vessel & Style Guided Generative Adversarial Network (VSG-GAN), an innovative algorithm building upon the foundational concept of GAN.

In VSG-GAN, a generator and discriminator engage in an adversarial process to produce realistic retinal images. Our approach decouples retinal image generation into distinct modules: the vascular skeleton and background style. Leveraging style transformation and GAN inversion, our proposed Hierarchical Variational Autoencoder (HVAE) module generates retinal images with diverse morphological traits. Additionally, the Spatially-Adaptive De-normalization (SPADE) module ensures consistency between input and generated images.

We evaluate our model on the MESSIDOR and RITE datasets using several metrics: Structural Similarity Index Measure (SSIM), Inception Score (IS), Fréchet Inception Distance (FID), and Kernel Inception Distance (KID). VSG-GAN outperforms existing methods on all of these metrics, which indicates its effectiveness in addressing dataset limitations and imbalances. By generating diverse and realistic retinal images, our algorithm offers a novel solution to these challenges in retinal image analysis. Applying VSG-GAN as a data augmentation approach in downstream diabetic retinopathy classification tasks has improved diagnostic accuracy, further advancing the utility of machine learning in this domain.
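Of the metrics above, FID compares Gaussian fits to feature embeddings of real and generated images: FID = ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^(1/2)). The NumPy sketch below computes this quantity from two pre-extracted feature matrices. In standard practice the features come from an Inception-v3 network; here feature extraction is assumed to have been done elsewhere, and the function names are hypothetical. It illustrates the metric and is not the evaluation code used for VSG-GAN.

```python
import numpy as np


def _sqrt_psd(m):
    """Symmetric square root of a positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(m)
    vals = np.clip(vals, 0.0, None)          # clip tiny negative values from round-off
    return (vecs * np.sqrt(vals)) @ vecs.T   # V diag(sqrt(vals)) V^T


def frechet_distance(feats_real, feats_gen):
    """FID between two sets of feature vectors (rows are samples)."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_g = np.cov(feats_gen, rowvar=False)
    # Tr((cov_r cov_g)^(1/2)) equals Tr((S cov_g S)^(1/2)) with S = cov_r^(1/2);
    # S cov_g S is symmetric PSD, so its eigenvalues are real and non-negative.
    s = _sqrt_psd(cov_r)
    eig = np.clip(np.linalg.eigvalsh(s @ cov_g @ s), 0.0, None)
    tr_covmean = np.sum(np.sqrt(eig))
    diff = mu_r - mu_g
    return float(diff @ diff + np.trace(cov_r) + np.trace(cov_g) - 2.0 * tr_covmean)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real = rng.normal(size=(500, 4))   # placeholder "features"
    # Shifting every feature by 1 leaves the covariance unchanged and adds ||1||^2 = 4.
    print(frechet_distance(real, real + 1.0))  # approximately 4.0
```

Lower FID means the generated feature distribution is closer to the real one; KID replaces the Gaussian fit with a kernel two-sample statistic.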

To ensure high quality and yield, today’s advanced manufacturing systems are equipped with thousands of sensors that continuously collect measurement data for process monitoring, defect diagnosis and yield learning. In particular, the recent adoption of Industry 4.0 has promoted a set of enabling technologies for low-cost sensing, processing and storage of manufacturing process data. While the manufacturing industry now generates large amounts of data, statistical algorithms, methodologies and tools are urgently needed to process these complex, heterogeneous and high-dimensional data and to address the issues posed by process complexity, process variability and capacity constraints. The objective of this project is to explore the enormous opportunities for data analytics in the manufacturing domain and to provide data-driven solutions for reducing manufacturing costs.

In this project, we have collaborated closely with leading domestic enterprises. Using sales and logistics data, we provide customers with guidance on pricing and discounts across all product categories. The project is integrated with new retail, applying a data-driven methodology to every stage from production to sales and providing advice on enterprise data management.

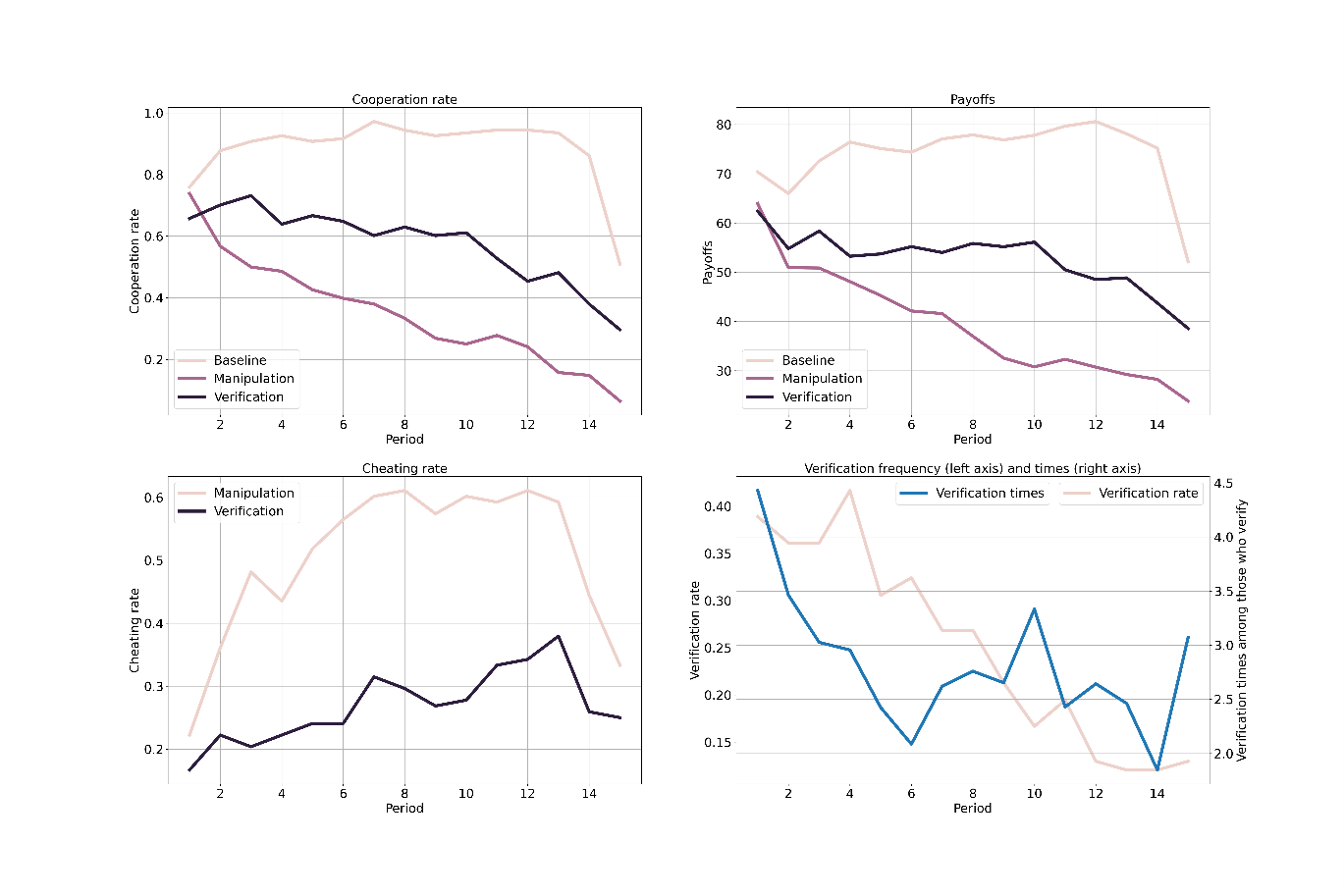

Reputation is a key cooperation-fostering mechanism, both in theoretical models and in real-world scenarios (Manrique et al., 2021; Nowak, 2006; Takacs et al., 2021). However, real-life reputation systems are imperfect because information may be noisy or manipulated (Antonioni et al., 2016; Hilbe et al., 2018). Here, we experimentally study how robust the role of reputation is when individuals can manipulate their public image, and we test to what extent the possibility of verifying the accuracy of public information reinstates cooperation. As a control condition, we employ a standard repeated experimental protocol that combines reputation and network reciprocity, allowing people to observe others’ past behavior and to decide jointly with whom to interact and whether to cooperate with or defect against all their network neighbors. In line with previous evidence, the level of cooperation is high and stable in this framework. In our first treatment condition, people can manipulate public information about their last action at a cost. Cooperation rates decline rapidly to zero in this condition, as about half of the participants engage in reputation manipulation. In our second treatment, in which subjects can both manipulate their own public reputation and verify others’ reputations at a cost, neither the level nor the stability of cooperation recovers fully. Rather, cooperation emerges to some extent but declines over time. These findings call for further investigation of the mechanisms underlying the vital yet complex role of reputation systems in human societies.

Summary of key experimental outcomes over time. Upper-left panel: cooperation rate; upper-right panel: payoffs; lower-left panel: manipulation rate; lower-right panel: verification rate and intensity. Note: The verification rate is the percentage of individuals who verified at least one neighbor (extensive margin). Verification times (verification intensity) is the average number of neighbors verified by those who verified at least one neighbor (intensive margin).

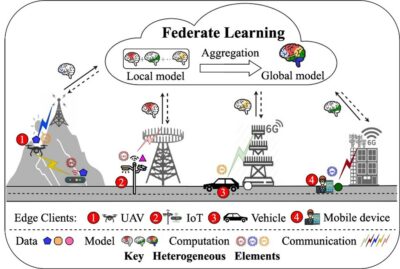
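The two verification measures defined in the figure note can be computed directly from per-participant counts. The sketch below does this for a single round; the input format (one count of verified neighbors per participant) and the function name are hypothetical, not the format of our experimental data.

```python
def verification_metrics(verified_counts):
    """Extensive and intensive verification margins for one round.

    verified_counts[i] is the number of neighbors participant i verified
    (0 if the participant did not verify anyone).
    """
    if not verified_counts:
        raise ValueError("need at least one participant")
    verifiers = [c for c in verified_counts if c > 0]
    # Extensive margin: share of participants who verified at least one neighbor (%).
    rate = 100.0 * len(verifiers) / len(verified_counts)
    # Intensive margin: mean number of neighbors verified, among verifiers only.
    intensity = sum(verifiers) / len(verifiers) if verifiers else 0.0
    return rate, intensity


# Hypothetical round with five participants.
print(verification_metrics([0, 2, 0, 1, 3]))  # (60.0, 2.0)
```

Separating the two margins shows whether a change in verification comes from more people verifying or from verifiers checking more neighbors.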

With the rapid advancement of 5G networks, billions of smart Internet of Things (IoT) devices are generating an enormous amount of data at the network edge. Although 6G is still at an early stage, the evolving 6G network is expected to adopt advanced artificial intelligence (AI) technologies to collect, transmit, and learn from these valuable data for innovative applications and intelligent services. However, traditional machine learning (ML) approaches require centralizing the training data in a data center or the cloud, raising serious user-privacy concerns. Federated learning (FL), an emerging distributed AI paradigm with a privacy-preserving nature, is anticipated to be a key enabler of ubiquitous AI in 6G networks. However, system and statistical heterogeneity pose several challenges to effective and efficient FL implementation in 6G networks. In this project, we investigate optimization approaches that can address these heterogeneity issues from three aspects: incentive mechanism design, network resource management, and personalized model optimization. This is a collaborative project with China Mobile.

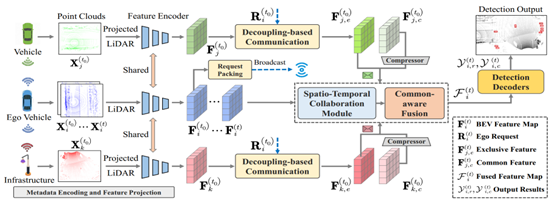
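For readers unfamiliar with FL, the sketch below shows Federated Averaging (FedAvg), the standard baseline algorithm: clients run a few local SGD epochs on their own data, and the server averages the resulting models weighted by sample count. It does not include the incentive, resource-management or personalization methods studied in this project; the linear model, client data and function names are hypothetical. The two clients deliberately cover different x-ranges, a simple form of the statistical heterogeneity mentioned above.

```python
def local_sgd(w, data, lr=0.1, epochs=5):
    """Client update: a few epochs of SGD on y ≈ w0 + w1 * x (squared loss)."""
    w0, w1 = w
    for _ in range(epochs):
        for x, y in data:
            err = (w0 + w1 * x) - y
            w0 -= lr * err
            w1 -= lr * err * x
    return [w0, w1]


def fedavg(client_weights, client_sizes):
    """Server update: average client models weighted by local sample counts."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


def federated_training(client_data, rounds=200):
    """Run FedAvg rounds; only model parameters, never raw data, reach the server."""
    global_w = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_sgd(global_w, data) for data in client_data]
        global_w = fedavg(updates, [len(data) for data in client_data])
    return global_w


if __name__ == "__main__":
    # Hypothetical non-IID clients: each sees a different x-range of y = 2x + 1.
    client_a = [(x, 2 * x + 1) for x in (0.0, 0.5, 1.0)]
    client_b = [(x, 2 * x + 1) for x in (1.5, 2.0)]
    print(federated_training([client_a, client_b]))  # close to [1.0, 2.0]
```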

Multi-agent collaborative perception, a potential application of vehicle-to-everything (V2X) communication, could significantly improve the perception performance of autonomous vehicles over single-agent perception. However, several challenges remain in achieving pragmatic information sharing in this emerging research area. Accordingly, we propose SCOPE, a novel collaborative perception framework that aggregates spatio-temporal awareness characteristics across on-road agents in an end-to-end manner. Specifically, SCOPE has three distinct strengths: i) it exploits effective semantic cues from the temporal context to enhance the current representations of the target agent; ii) it aggregates perceptually critical spatial information from heterogeneous agents and overcomes localization errors via multi-scale feature interactions; iii) it integrates multi-source representations of the target agent according to their complementary contributions through an adaptive fusion paradigm. To thoroughly evaluate SCOPE, we consider both real-world and simulated scenarios of collaborative 3D object detection on three datasets. Extensive experiments show the superiority of our approach and the necessity of the proposed components. The corresponding work was published at the 2023 IEEE/CVF International Conference on Computer Vision (ICCV 2023).
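The idea of fusing multi-source representations according to their contributions can be illustrated with a generic per-location softmax weighting over agents. The NumPy sketch below is an assumption-laden simplification, not SCOPE's actual fusion module: it assumes the agents' feature maps are already aligned to the target agent's frame, and `score_weights` is a hypothetical stand-in for learned parameters.

```python
import numpy as np


def adaptive_fusion(features, score_weights):
    """Fuse per-agent feature maps with per-location softmax weights.

    features:      (A, C, H, W) maps from A agents, assumed already aligned to
                   the target agent's coordinate frame.
    score_weights: (C,) hypothetical stand-in for a learned 1x1 scoring layer.
    Returns a (C, H, W) fused map.
    """
    scores = np.einsum("c,achw->ahw", score_weights, features)   # (A, H, W)
    scores = scores - scores.max(axis=0, keepdims=True)          # stable softmax
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=0, keepdims=True)       # sums to 1 over agents
    return np.einsum("ahw,achw->chw", weights, features)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.normal(size=(3, 8, 4, 4))   # 3 agents, 8 channels, 4x4 grid (toy sizes)
    fused = adaptive_fusion(feats, rng.normal(size=8))
    print(fused.shape)  # (8, 4, 4)
```

Because the weights are computed per location, an agent can dominate where its view is informative and be down-weighted elsewhere; with all-zero scores the fusion reduces to a plain mean.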

Despite advancements in previous approaches, challenges remain due to redundant communication patterns and vulnerable collaboration processes. To address these issues, we propose What2comm, an end-to-end collaborative perception framework that achieves a trade-off between perception performance and communication bandwidth. Our novelties lie in three aspects. First, we design an efficient communication mechanism based on feature decoupling that transmits exclusive and common feature maps among heterogeneous agents to provide perceptually holistic messages. Second, we introduce a spatio-temporal collaboration module that integrates complementary information from collaborators and temporal ego cues, making the collaboration procedure robust to transmission delay and localization errors. Finally, we propose a common-aware fusion strategy that refines the final representations with informative common features. Comprehensive experiments in real-world and simulated scenarios demonstrate the effectiveness of What2comm. The corresponding work was published at the 31st ACM International Conference on Multimedia (MM 2023).

Medical imaging modalities such as MRI, CT and PET provide visualization and quantification of various disease and health properties of the human body. The quality of medical images can directly influence the accuracy of disease diagnosis and treatment. One recent research project in Dr. Lei Zhang’s lab focuses on developing and evaluating novel image processing methods that minimize metal artifacts in MRI of the human brain. By combining three types of MRI images, the underlying biophysical properties of brain tissue (T1, T2, and proton density, PD) can be determined and then used to synthesize high-contrast MRI images that are resistant to metal artifacts. Two aspects of brain image quality, metal artifacts and image contrast, are thus improved simultaneously. This enhancement can expand the population to which brain MRI studies are applicable and improve the accuracy and precision of brain disease diagnosis and treatment.

Figure 1. Metal artifact reduction and image contrast enhancement of human brain MRI
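A common way to synthesize contrast from quantitative T1, T2 and PD maps is the simplified spin-echo signal model S = PD · (1 − exp(−TR/T1)) · exp(−TE/T2), where the repetition time TR and echo time TE are chosen at synthesis time. The NumPy sketch below applies this model voxel-wise. Whether the lab's method uses exactly this signal equation is an assumption, and the tissue values and TR/TE settings in the example are illustrative placeholders rather than measurements.

```python
import numpy as np


def synthesize_spin_echo(pd, t1, t2, tr, te):
    """Simplified spin-echo signal S = PD * (1 - exp(-TR/T1)) * exp(-TE/T2).

    pd, t1, t2: arrays (or scalars) of proton density and relaxation times (ms).
    tr, te:     repetition and echo times (ms) of the virtual acquisition.
    """
    pd, t1, t2 = (np.asarray(a, dtype=float) for a in (pd, t1, t2))
    return pd * (1.0 - np.exp(-tr / t1)) * np.exp(-te / t2)


if __name__ == "__main__":
    # Illustrative two-voxel "maps" (placeholder values, not measurements).
    pd = [0.7, 0.8]
    t1 = [800.0, 1300.0]
    t2 = [80.0, 100.0]
    t1w = synthesize_spin_echo(pd, t1, t2, tr=500.0, te=15.0)    # short TR/TE: T1-weighted
    t2w = synthesize_spin_echo(pd, t1, t2, tr=4000.0, te=100.0)  # long TR/TE: T2-weighted
    print(t1w, t2w)
```

Because TR and TE are free parameters after the maps are estimated, different contrasts can be generated from one set of quantitative maps without further scanning.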

This study reviews the literature on green building certifications and analyzes a long-term data set from the first LEED-certified higher education buildings in China. Given the ambient air quality conditions in China, we assess air quality parameters to determine whether meeting LEED-recommended standards in operations and maintenance is achievable under seasonal extremes.

Air quality data, including CO2, TVOC, PM2.5, temperature and humidity, were provided for two LEED Gold and three LEED Silver certified buildings. The data were recorded at one-minute intervals or as hourly averages over a two-year period. Outdoor air quality parameters measured at the building site and at a regional site were correlated with the environmental data, with the goal of developing predictive models of building air quality that support the health and well-being of occupants.

The figure depicts mean PM2.5 concentration levels outdoors on campus, in the HVAC system and in each of the buildings.

The purpose of our project is to address pressing data privacy concerns while leveraging the potential of intelligent devices and massive data in our increasingly connected world. Traditional data-driven AI technologies often require collecting data from local devices and centralizing it in a data center (cloud), which introduces not only communication latency but also significant data privacy risks, such as data misuse and leakage. Our FedCampus project addresses this challenge by creating a privacy-preserving health data platform for smart campuses. Using techniques such as differential privacy, federated learning, and federated analytics, FedCampus balances the delicate trade-off between personal data privacy and decentralized analytics of data from smartwatches and smartphones. We have successfully launched the project at Duke Kunshan University. This is a collaborative project with Huawei Health.

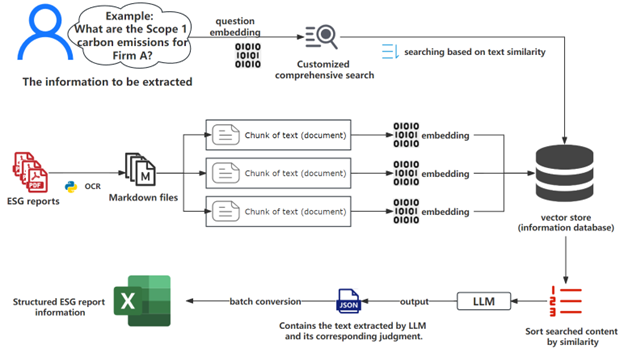
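As one example of the differential privacy techniques mentioned above, the sketch below applies the Laplace mechanism to a campus-wide average, such as mean daily step count. It is not the FedCampus pipeline: the clipping bounds, the privacy budget `epsilon`, the data and the function names are hypothetical, and the code is not hardened against known floating-point attacks on naive Laplace samplers, so it should not be used as-is in production.

```python
import random


def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_mean(values, lower, upper, epsilon, rng=random):
    """epsilon-differentially private mean via the Laplace mechanism.

    Values are clipped to [lower, upper]. Treating the number of participants n
    as public and replacing one participant's value as the neighboring relation,
    the mean changes by at most (upper - lower) / n, which sets the noise scale.
    """
    n = len(values)
    clipped = [min(max(v, lower), upper) for v in values]
    sensitivity = (upper - lower) / n
    return sum(clipped) / n + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical daily step counts; bounds and epsilon are illustrative.
    rng = random.Random(42)
    steps = [rng.randint(2000, 15000) for _ in range(500)]
    print(dp_mean(steps, lower=0, upper=20000, epsilon=1.0, rng=rng))
```

Smaller `epsilon` gives stronger privacy but larger noise; since the noise scale shrinks as 1/n, aggregates over many participants stay useful.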

The quality of environmental, social, and governance (ESG) reporting varies widely among publicly listed companies in China. Only some companies are required to publish ESG reports, and their content and format are inconsistent across entities. This disparity poses a major challenge for investors and researchers, who must decipher unstructured ESG reports. Moreover, acquiring ESG data demands more resources than acquiring financial data, and manual reading of ESG reports by different people can introduce significant bias. To address these challenges, we evaluate the quality of corporate ESG information disclosure by leveraging the capabilities of large language models such as GPT. Specifically, we have designed two models to achieve the best possible results:

report disclosure.

By applying each model to the evaluation task it is tailored for, we aim to streamline the assessment of ESG reports. This approach is designed to ensure accurate and relevant information extraction and to provide a standardized assessment, reducing the biases inherent in manual reading and offering nuanced, industry-specific insights. The value of this solution lies in making ESG reporting more accessible and understandable, ultimately benefiting investors and researchers.

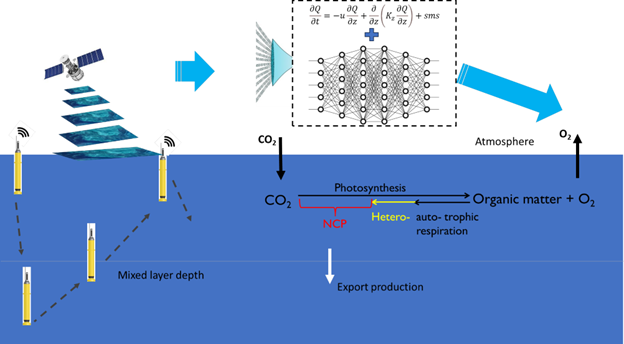

Photosynthesis in excess of respiration at the ocean surface leads to the production of organic matter, part of which is transported to the deep ocean through sinking and mixing. This biological process, known as the biological carbon pump, lowers carbon dioxide (CO2) concentrations at the ocean surface and facilitates the flux of CO2 from the atmosphere into the ocean. However, its estimates and forecasts carry large uncertainties, due in part to data scarcity. We fill these gaps by developing neural differential equation models for estimating and understanding the biological carbon pump and ecosystem dynamics, using heterogeneous big data from autonomous platforms, satellite remote sensing, and process models.
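A neural differential equation replaces all or part of the right-hand side of an ODE, dx/dt = f_θ(t, x), with a neural network. The sketch below shows only the forward pass: a tiny NumPy multilayer perceptron used as the vector field and integrated with a fixed-step classical Runge-Kutta (RK4) solver. Training θ against observations (for example by backpropagating through the solver or with the adjoint method) is not shown, and the state dimension, network size and parameter values are hypothetical rather than those of our biological carbon pump models.

```python
import numpy as np


def mlp_rhs(params):
    """Return f(t, x) = W2 @ tanh(W1 @ x + b1) + b2, a tiny neural vector field."""
    W1, b1, W2, b2 = params

    def f(t, x):
        return W2 @ np.tanh(W1 @ x + b1) + b2

    return f


def rk4_solve(f, x0, t0, t1, steps):
    """Integrate dx/dt = f(t, x) from t0 to t1 with fixed-step classical RK4."""
    h = (t1 - t0) / steps
    t, x = t0, np.asarray(x0, dtype=float)
    traj = [x.copy()]
    for _ in range(steps):
        k1 = f(t, x)
        k2 = f(t + h / 2, x + h / 2 * k1)
        k3 = f(t + h / 2, x + h / 2 * k2)
        k4 = f(t + h, x + h * k3)
        x = x + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
        traj.append(x.copy())
    return np.array(traj)   # shape (steps + 1, state_dim)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    dim, hidden = 3, 16   # hypothetical: 3 state variables, 16 hidden units
    params = (rng.normal(scale=0.3, size=(hidden, dim)), np.zeros(hidden),
              rng.normal(scale=0.3, size=(dim, hidden)), np.zeros(dim))
    traj = rk4_solve(mlp_rhs(params), x0=[1.0, 0.5, 0.2], t0=0.0, t1=10.0, steps=200)
    print(traj.shape)  # (201, 3)
```

In a hybrid setting, known process-model terms can be kept in f alongside the network, so the network only learns the poorly constrained part of the dynamics.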

0512-36657577

No.8 Duke Avenue, Kunshan, Jiangsu, China, 215316